The RelocDB dataset will be available for download very soon.

Overview

Current RGB-D datasets are either relatively small, limited in ground-truth labelling, or heavily reliant on synthetic data. As algorithms grow more sophisticated and performance on these datasets saturates, it becomes harder to benchmark new methods and to judge advances in algorithm design. There is therefore a growing need for large-scale datasets with high-quality labelling.

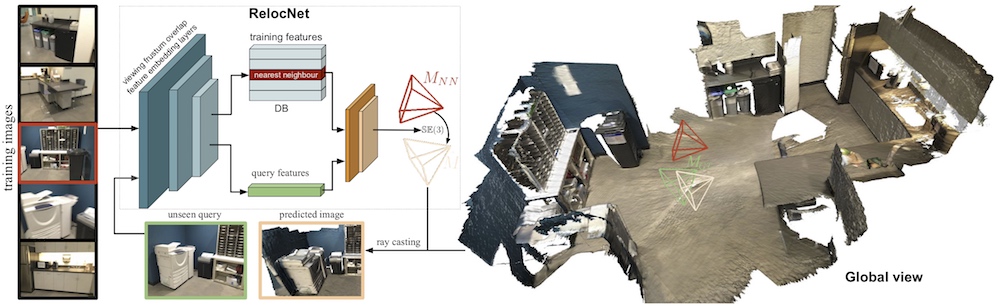

To address this need, we introduce RelocDB, a large-scale indoor RGB-D dataset captured with the latest Google Tango motion-tracking devices. This gives it state-of-the-art 6-DoF sensor pose accuracy, which can be used to build and evaluate multi-view geometry algorithms such as camera motion tracking, 3D model reconstruction, and camera pose relocalisation.

The dataset comprises 500+ sequences of RGB-D data captured with a Google Tango-enabled device. A typical sequence contains two rounds of indoor scanning: the first round can be used to build a reference map, and the second to evaluate a relocalisation algorithm. The sequences cover a wide variety of indoor lighting conditions and textures, which makes the dataset well suited to evaluating the method proposed in our work, RelocNet.
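The description above does not prescribe an evaluation protocol for the second round. As a hedged sketch, relocalisation accuracy is commonly summarised by the translation and rotation error between each estimated pose and its ground-truth pose. The code assumes both are 4x4 camera-to-world matrices expressed in the same map frame; `pose_errors` and `median_errors` are hypothetical helpers, not part of the RelocDB release.

```python
import numpy as np


def pose_errors(T_est, T_gt):
    """Return (translation error, rotation error in degrees) for two 4x4 poses.

    Assumption: both poses are camera-to-world matrices in the same frame,
    so the translation column is the camera position.
    """
    T_est = np.asarray(T_est, dtype=float)
    T_gt = np.asarray(T_gt, dtype=float)
    # Euclidean distance between the two camera positions.
    t_err = float(np.linalg.norm(T_est[:3, 3] - T_gt[:3, 3]))
    # Angle of the relative rotation R_est^T R_gt, recovered from its trace.
    R_rel = T_est[:3, :3].T @ T_gt[:3, :3]
    cos_angle = np.clip((np.trace(R_rel) - 1.0) / 2.0, -1.0, 1.0)
    r_err = float(np.degrees(np.arccos(cos_angle)))
    return t_err, r_err


def median_errors(est_poses, gt_poses):
    """Median translation and rotation error over paired pose lists."""
    errs = np.array([pose_errors(e, g) for e, g in zip(est_poses, gt_poses)])
    return float(np.median(errs[:, 0])), float(np.median(errs[:, 1]))
```

Reporting the median rather than the mean keeps a few catastrophic relocalisation failures from dominating the summary.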

Format

The Tango device captures a stream of 640 x 360 RGB images at about 30 fps and a stream of 224 x 172 point-cloud images at about 5 fps.

The 6-DoF camera poses of both the RGB and depth frames are provided by the capture device. Each sequence is stored in the following layout:

.\sequence_index\

rgb\timestamp.ppm -> color images in PPM format, named by their timestamps

points\timestamp.exr -> point clouds in OpenEXR format, named by their timestamps

calib.txt -> the camera calibration parameters (intrinsics and depth-to-RGB extrinsics)

depth_trajectory.txt -> the camera pose for each captured point-cloud frame

rgb_trajectory.txt -> the camera pose for each RGB frame

points.txt -> the timestamps of the corresponding .exr files

rgb.txt -> the timestamps of the RGB images
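Because the RGB stream (about 30 fps) and the point-cloud stream (about 5 fps) run at different rates, roughly six RGB frames arrive per point cloud, so pairing them means matching timestamps. The sketch below does nearest-timestamp association. It assumes that each non-empty line of rgb.txt and points.txt starts with a timestamp in seconds; the exact line layout and units are not documented here, and `parse_timestamps`, `associate` and the 0.02 s tolerance are illustrative choices.

```python
import bisect


def parse_timestamps(lines):
    """Collect the leading timestamp from each line of rgb.txt / points.txt.

    Assumption: every non-empty, non-comment line starts with a timestamp
    (in seconds) as its first whitespace-separated token.
    """
    stamps = []
    for line in lines:
        tokens = line.split()
        if tokens and not tokens[0].startswith("#"):
            stamps.append(float(tokens[0]))
    return stamps


def associate(depth_stamps, rgb_stamps, max_dt=0.02):
    """Pair each depth timestamp with the nearest RGB timestamp.

    Returns (depth_stamp, rgb_stamp) pairs; depth frames whose nearest RGB
    frame is more than max_dt seconds away are dropped.
    """
    rgb_sorted = sorted(rgb_stamps)
    pairs = []
    for d in depth_stamps:
        i = bisect.bisect_left(rgb_sorted, d)
        # The nearest RGB stamp is either just before or at position i.
        candidates = [rgb_sorted[j] for j in (i - 1, i) if 0 <= j < len(rgb_sorted)]
        if not candidates:
            continue
        best = min(candidates, key=lambda r: abs(r - d))
        if abs(best - d) <= max_dt:
            pairs.append((d, best))
    return pairs
```

With files opened normally, `associate(parse_timestamps(points_file), parse_timestamps(rgb_file))` yields the pairs. The 0.02 s tolerance is a little more than half the ~33 ms RGB frame interval, so every depth frame that falls inside the RGB stream should find a partner.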

Each .exr file stores a 3D point for every pixel location: floating-point coordinates (x, y, z) expressed relative to the camera pose of that frame.
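If the point image follows an undistorted pinhole model with the depth intrinsics listed in calib.txt, its z channel is an ordinary depth map, and projecting each point should recover its own pixel location, which is a handy sanity check. The sketch below assumes NumPy, that pinhole geometry, and that missing measurements are stored as non-finite values or z <= 0; none of this is confirmed by the dataset description. Reading the .exr file itself needs an EXR-capable library (for example the OpenEXR Python bindings) and is omitted.

```python
import numpy as np

# Depth-camera intrinsics as listed in calib.txt (f_x, f_y, c_x, c_y).
FX_D, FY_D, CX_D, CY_D = 217.43, 217.43, 111.96, 84.2488


def depth_map_from_points(points):
    """Extract a depth image (the z channel) from an H x W x 3 point image.

    Assumption: pixels without a valid measurement hold non-finite values
    or z <= 0; they are set to 0 in the returned depth map.
    """
    z = np.asarray(points, dtype=float)[..., 2]
    with np.errstate(invalid="ignore"):
        valid = np.isfinite(z) & (z > 0)
    return np.where(valid, z, 0.0)


def project_to_depth_pixels(points):
    """Project camera-frame (x, y, z) points with z > 0 to (u, v) pixels."""
    p = np.asarray(points, dtype=float).reshape(-1, 3)
    u = FX_D * p[:, 0] / p[:, 2] + CX_D
    v = FY_D * p[:, 1] / p[:, 2] + CY_D
    return np.stack([u, v], axis=1)
```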

The camera calibration parameters in calib.txt (intrinsics and the depth-to-RGB extrinsics) are organized as follows:

640 360 -> RGB image resolution (width x height)

491.179446 490.353338 -> focal lengths f_x and f_y of the RGB camera, in pixels

328.954618 177.982037 -> principal point c_x and c_y of the RGB camera, in pixel coordinates following the OpenCV convention

224 172 -> .exr point-cloud resolution (width x height)

217.430000 217.430000 -> focal lengths f_x and f_y of the depth camera, in pixels

111.960000 84.248800 -> principal point c_x and c_y of the depth camera

0.999991 0.004030 -0.000879 0.000247
-0.004021 0.999947 0.009447 -0.011762
0.000917 -0.009443 0.999955 0.007880 -> 3 x 4 matrix [ R | t ], the transformation from the depth camera coordinate system to the RGB camera coordinate system
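To register depth with color (for example, to color a point cloud), depth-frame points can be moved into the RGB frame with p_rgb = R p_depth + t and then projected with the RGB intrinsics. The sketch below is hedged: it assumes calib.txt contains only the 24 numbers shown above, in that order, and it ignores lens distortion because no distortion coefficients are listed. `parse_calib` and `depth_points_to_rgb_pixels` are hypothetical helper names.

```python
import numpy as np


def parse_calib(text):
    """Parse calib.txt contents into a dict of NumPy arrays.

    Assumption: the file holds only the numbers listed above, in order
    (2 + 2 + 2 + 2 + 2 + 2 + 12 values), separated by whitespace.
    """
    vals = [float(tok) for tok in text.split()]
    if len(vals) != 24:
        raise ValueError(f"expected 24 numbers, got {len(vals)}")
    return {
        "rgb_size": (int(vals[0]), int(vals[1])),
        "K_rgb": np.array([[vals[2], 0.0, vals[4]],
                           [0.0, vals[3], vals[5]],
                           [0.0, 0.0, 1.0]]),
        "depth_size": (int(vals[6]), int(vals[7])),
        "K_depth": np.array([[vals[8], 0.0, vals[10]],
                             [0.0, vals[9], vals[11]],
                             [0.0, 0.0, 1.0]]),
        "T_depth_to_rgb": np.array(vals[12:24]).reshape(3, 4),
    }


def depth_points_to_rgb_pixels(points, calib):
    """Map depth-frame points to RGB pixel coordinates.

    Applies p_rgb = R p_depth + t, then projects with the RGB intrinsics
    (pinhole model, no distortion). Returns (N, 2) pixels and a mask of
    points that lie in front of the camera and inside the image.
    """
    p = np.asarray(points, dtype=float).reshape(-1, 3)
    T = calib["T_depth_to_rgb"]
    p_rgb = p @ T[:, :3].T + T[:, 3]
    z = p_rgb[:, 2]
    uvw = p_rgb @ calib["K_rgb"].T
    with np.errstate(divide="ignore", invalid="ignore"):
        uv = uvw[:, :2] / z[:, None]
    w, h = calib["rgb_size"]
    inside = (z > 0) & (uv[:, 0] >= 0) & (uv[:, 0] < w) & (uv[:, 1] >= 0) & (uv[:, 1] < h)
    return uv, inside
```

For a whole frame, the H x W x 3 point image can be passed directly (it is flattened internally); `uv[inside]` then gives the RGB pixels from which colors can be sampled.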